🤖 Working with LLMs – Introduction to Language Models



What Are LLMs and Generative AI?

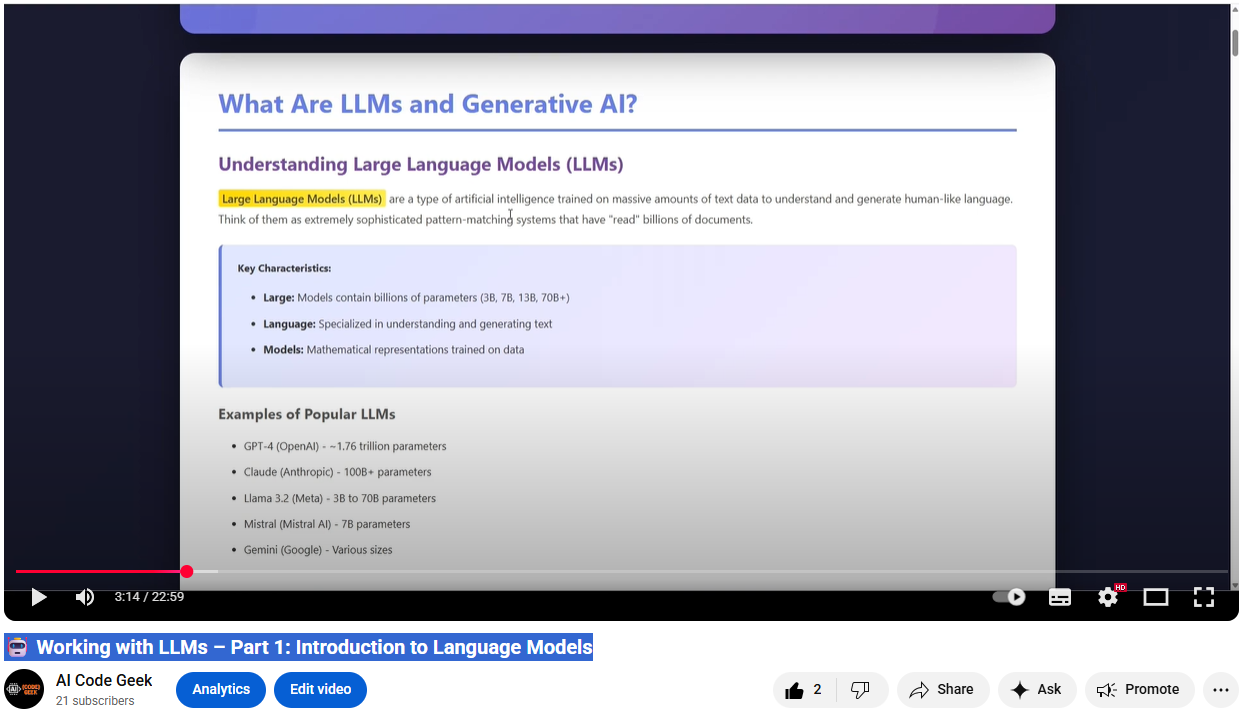

Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are a type of artificial intelligence trained on massive amounts of text data to understand and generate human-like language. Think of them as extremely sophisticated pattern-matching systems that have “read” billions of documents.

Key Characteristics:

- Large: Models contain billions of parameters (3B, 7B, 13B, 70B+)

- Language: Specialized in understanding and generating text

- Models: Mathematical representations trained on data

Examples of Popular LLMs

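- GPT-4 (OpenAI) - parameter count not publicly disclosed (unofficial estimates run to ~1.76 trillion)

- Claude (Anthropic) - parameter count not publicly disclosed

- Llama 3.2 (Meta) - 1B and 3B text models, plus 11B and 90B vision models

- Mistral 7B (Mistral AI) - 7B parameters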

- Gemini (Google) - Various sizes

What is Generative AI?

Generative AI is a broader category of artificial intelligence that can create new content based on patterns learned from training data. LLMs are a specific type of generative AI focused on text.

| Type | What It Generates | Examples |
|---|---|---|
| Text Generation | Articles, code, emails, stories | GPT-4, Claude, Llama |
| Image Generation | Photos, artwork, designs | DALL-E, Midjourney, Stable Diffusion |
| Audio Generation | Music, voice, sound effects | MusicLM, ElevenLabs |
| Video Generation | Video clips, animations | Sora, Runway |
| Code Generation | Software, scripts, functions | GitHub Copilot, CodeLlama |

How LLMs Work: The Basics

Training Process

- Data Collection: Gather billions of text documents

- Tokenization: Break text into smaller pieces (tokens)

- Pattern Learning: Neural network learns relationships

- Fine-tuning: Adjust model for specific tasks
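
The tokenization step can be illustrated with a toy word-level tokenizer. This is only a sketch: production LLM tokenizers typically use subword schemes such as byte-pair encoding, and the helper names `tokenize` and `build_vocab` are made up for this example.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word and punctuation tokens (toy scheme)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Give each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


tokens = tokenize("Write a Python function, then test the function.")
vocab = build_vocab(tokens)
ids = [vocab[tok] for tok in tokens]

print(tokens)
print(ids)  # the repeated word "function" maps to the same ID both times
```

The model never sees raw text, only the integer IDs, which is why the same word always has to map to the same ID.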

Generation Process

User Input: "Write a Python function to..."

↓

Tokenization: Break into tokens

↓

Model Processing: Predict next likely tokens

↓

Generation: Create response token by token

↓

Output: Complete Python function
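
The token-by-token loop above can be sketched with a deliberately tiny stand-in for the model: a table of word-pair counts instead of a neural network. The corpus, `train_bigrams`, and `generate` are hypothetical names chosen for illustration; a real LLM predicts a probability distribution over its whole vocabulary and usually samples from it rather than always taking the top choice.

```python
from collections import Counter, defaultdict


def train_bigrams(tokens):
    """Count which token follows which (a stand-in for 'pattern learning')."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_tokens=5):
    """Greedy generation: repeatedly append the most frequent next token."""
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:
            break  # nothing ever followed this token in the training text
        output.append(followers.most_common(1)[0][0])
    return output


corpus = "the cat sat on the mat and the cat sat on the sofa".split()
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", max_tokens=4)))
```

Each new token is chosen using only what has been generated so far, which is the same shape of loop an LLM runs, just with a far richer notion of "what comes next".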

What Makes Them “Smart”

- 🧠 Context Understanding: Draw on earlier parts of the conversation that fit in the context window

- 🔍 Pattern Recognition: Apply learned patterns to new situations

- 💭 Reasoning: Perform multi-step logical thinking

- ✨ Creativity: Combine concepts in novel ways

Cloud-Based LLMs vs Locally Installed LLMs

Cloud-Based LLMs

Cloud-based LLMs are AI models hosted on remote servers and accessed over the internet through APIs.

Your Application

↓ (HTTPS Request)

Internet

↓

Cloud Provider Servers

↓

GPU Clusters Running the Model

↓ (HTTPS Response)

Your Application Receives Result

| Provider | Model | Access Method | Pricing |
|---|---|---|---|
| OpenAI | GPT-4, GPT-3.5 | REST API | ~$0.01-0.03/1K tokens |
| Anthropic | Claude 3 Opus/Sonnet | REST API | ~$0.015/1K tokens |
| Google | Gemini Pro | REST API | Free tier + Pay-per-use |
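
Per-token pricing is easier to reason about with a quick calculation. The sketch below uses the approximate $0.01-0.03 per 1K tokens range from the table; the workload figures (1,000 requests a day, 500 tokens each) are invented for illustration, and real price lists often charge input and output tokens at different rates.

```python
def estimate_cost(total_tokens: int, price_per_1k_usd: float) -> float:
    """Cost in USD for a token count at a flat per-1K-token price."""
    return total_tokens / 1000 * price_per_1k_usd


# Hypothetical workload, chosen only to make the arithmetic concrete.
requests_per_day = 1000
tokens_per_request = 500
daily_tokens = requests_per_day * tokens_per_request

low = estimate_cost(daily_tokens, 0.01)
high = estimate_cost(daily_tokens, 0.03)
print(f"{daily_tokens} tokens/day costs roughly ${low:.2f} to ${high:.2f} per day")
```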

Cloud-Based LLMs: Advantages & Disadvantages

✅ Advantages

- Cutting-edge quality

- Zero infrastructure

- Instant updates

- Scalability

- Easy integration

- No maintenance

❌ Disadvantages

- Ongoing costs

- Privacy concerns

- Internet dependency

- Rate limits

- Vendor lock-in

- Network latency

When to Use Cloud-Based LLMs:

- You are building production applications for many users

- You need the highest available model quality

- You don’t have powerful local hardware

- Your budget allows for API costs

Locally Installed LLMs

Local LLMs are AI models downloaded and run entirely on your own computer or server.

Your Application

↓ (Local API Call - localhost)

Ollama Service (Running on Your Machine)

↓

LLM Model (Loaded in RAM/GPU)

↓

Your Application Receives Result

| Platform | Description | Models Available |
|---|---|---|
| Ollama | Docker-like platform for running LLMs | Llama, Mistral, Phi, Gemma, CodeLlama |
| LM Studio | GUI application for LLM management | 100+ models from HuggingFace |
| GPT4All | Desktop app for local LLMs | GPT-J, Llama, MPT, Falcon |

Local LLMs: Advantages & Disadvantages

✅ Advantages

- Complete privacy

- No per-token API fees

- Offline capability

- No rate limits

- Full control

- No network latency (response speed depends on your hardware)

❌ Disadvantages

- Hardware requirements

- Quality trade-off

- Setup complexity

- Storage space (2-80GB)

- Maintenance needed

- Single user focus

When to Use Local LLMs:

- Privacy is critical (medical, legal, personal data)

- You want to avoid per-token API costs

- You need offline functionality

- You are building personal tools or prototypes

- You have decent hardware (16GB+ RAM recommended)
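
The hardware and storage figures follow from simple arithmetic: parameter count times bytes per parameter. The sketch below is a rough rule of thumb, assuming 2 bytes per parameter for 16-bit weights and 0.5 bytes for 4-bit quantized weights; it ignores runtime overhead such as the context cache, so actual usage will be somewhat higher. The function name is hypothetical.

```python
def weights_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


# Bytes per parameter for two common storage formats (assumed values).
FORMATS = {"16-bit": 2.0, "4-bit quantized": 0.5}

for size in (3, 7, 70):
    for name, nbytes in FORMATS.items():
        print(f"{size}B model, {name}: ~{weights_memory_gb(size, nbytes):.1f} GB")
```

This is why a quantized 7B model fits comfortably on a 16GB machine while a 70B model generally does not.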

Comparison Summary

| Feature | Cloud-Based | Locally Installed |
|---|---|---|
| Cost | Pay-per-use ($) | Free after setup |
| Privacy | Data sent externally | Complete privacy |
| Quality | Best available (GPT-4) | Good (Llama 3.2, Mistral) |
| Setup | Minutes (API key) | 30-60 minutes |
| Hardware | None required | 8-32GB RAM, GPU helps |
| Internet | Required | Optional |
| Latency | 1-5 seconds | 0.5-3 seconds |

Working with LLMs: REST APIs

A REST API (Representational State Transfer API) is a web-based interface that allows applications to communicate with services over HTTP/HTTPS.

Core Concepts

- Endpoint: URL where the API is accessed

- HTTP Methods: Actions you can perform (GET, POST, PUT, DELETE)

- Request: Data you send to the API

- Response: Data the API sends back

- Authentication: API keys or tokens to verify identity
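
To see how these concepts map onto an actual request, the sketch below builds (but does not send) a POST request using only Python's standard library. The endpoint URL, model name, and `build_chat_request` helper are placeholders invented for this example, not a real provider's API.

```python
import json
import urllib.request


def build_chat_request(endpoint, api_key, model, user_message):
    """Assemble an HTTP POST request carrying a JSON chat payload."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        endpoint,                                   # Endpoint: where the API lives
        data=json.dumps(payload).encode("utf-8"),   # Request: the data we send
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",   # Authentication: API key
        },
        method="POST",                              # HTTP method
    )


# Placeholder values; substitute a real endpoint and key before sending.
req = build_chat_request(
    "https://api.example.com/v1/chat", "YOUR_API_KEY", "example-model", "Hello"
)
print(req.get_method(), req.full_url)
print(json.loads(req.data))

# Sending it would yield the Response:
#   with urllib.request.urlopen(req) as resp:
#       result = json.load(resp)
```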

OpenAI API Example

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer YOUR_API_KEY" \
      -d '{
        "model": "gpt-4",
        "messages": [
          {
            "role": "user",
            "content": "Explain quantum computing"
          }
        ]
      }'

Ollama REST API (Local)

Ollama provides a local REST API that works similarly to cloud APIs but runs entirely on your machine.

Chat Endpoint

    curl http://localhost:11434/api/chat -d '{
      "model": "llama3.2",
      "messages": [
        {
          "role": "user",
          "content": "Why is the sky blue?"
        }
      ]
    }'

Generate Endpoint

    curl http://localhost:11434/api/generate -d '{
      "model": "llama3.2",
      "prompt": "Write a haiku about coding",
      "stream": false
    }'
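
The generate example sets "stream": false to get a single JSON object back. When streaming is left on, the endpoint is understood to return newline-delimited JSON, one object per chunk, each carrying a "response" fragment and a "done" flag. The sketch below shows how such lines could be reassembled; the sample lines are hand-written for illustration rather than captured from a real server, and `join_stream` is a hypothetical helper.

```python
import json
from typing import Iterable


def join_stream(lines: Iterable[str]) -> str:
    """Concatenate the 'response' fragments from newline-delimited JSON chunks."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk marks the end of the stream
    return "".join(parts)


# Illustrative chunks (assumed shape, not real server output).
sample_lines = [
    '{"model": "llama3.2", "response": "Code", "done": false}',
    '{"model": "llama3.2", "response": " flows", "done": false}',
    '{"model": "llama3.2", "response": "", "done": true}',
]
print(join_stream(sample_lines))
```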

Ollama API Endpoints

| Endpoint | Method | Purpose |
|---|---|---|
| /api/generate | POST | Simple text generation |
| /api/chat | POST | Conversational chat |
| /api/tags | GET | List available models |
| /api/pull | POST | Download a model |

What are SDKs?

An SDK (Software Development Kit) is a collection of tools, libraries, and code that makes it easier to use an API in a specific programming language.

Why Use SDKs Instead of Raw APIs?

- ✅ Simpler Code: Write less boilerplate

- ✅ Type Safety: Better error checking

- ✅ Auto-completion: IDE support

- ✅ Error Handling: Built-in retry logic
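
To give a sense of the boilerplate that built-in retry logic saves, here is a minimal sketch of retrying with exponential backoff. It is not how any particular SDK implements retries; `call_with_retries` and `flaky_request` are hypothetical, and a real client would also decide which HTTP status codes are worth retrying.

```python
import time


def call_with_retries(func, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying on ConnectionError with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the error
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...


# A fake request that fails twice and then succeeds.
attempts = {"count": 0}


def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary network failure")
    return "ok"


# Pass a no-op sleep so the demo finishes instantly.
result = call_with_retries(flaky_request, sleep=lambda seconds: None)
print(result, "after", attempts["count"], "attempts")
```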

OpenAI Python SDK

Installation

pip install openai

Basic Usage

    import os

    from openai import OpenAI

    # Initialize client (key read from the environment rather than hard-coded)
    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

    # Simple chat completion
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "What is machine learning?"}
        ]
    )

    print(response.choices[0].message.content)

Streaming Response

    # Reuses the client created in Basic Usage
    stream = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": "Tell me a story"}],
        stream=True
    )

    # Print each piece of text as soon as it arrives
    for chunk in stream:
        if chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="", flush=True)

Anthropic Python SDK

Installation

pip install anthropic

Basic Usage

    import os

    import anthropic

    # Initialize client (key read from the environment rather than hard-coded)
    client = anthropic.Anthropic(api_key=os.environ["ANTHROPIC_API_KEY"])

    # Create message
    message = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[
            {"role": "user", "content": "Explain REST APIs simply"}
        ]
    )

    print(message.content[0].text)

Ollama Python SDK

Installation

pip install ollama

Basic Usage

    import ollama

    # Simple chat
    response = ollama.chat(
        model='llama3.2',
        messages=[
            {
                'role': 'user',
                'content': 'Why is the ocean salty?'
            }
        ]
    )

    print(response['message']['content'])

Streaming Response

    import ollama

    stream = ollama.chat(
        model='llama3.2',
        messages=[{'role': 'user', 'content': 'Tell me about Python'}],
        stream=True
    )

    # Print each chunk as it arrives
    for chunk in stream:
        print(chunk['message']['content'], end='', flush=True)

Multi-turn Conversation with Ollama

    import ollama

    messages = []

    # First message
    messages.append({'role': 'user', 'content': 'Hello!'})
    response = ollama.chat(model='llama3.2', messages=messages)
    messages.append({
        'role': 'assistant',
        'content': response['message']['content']
    })

    # Second message (with context)
    messages.append({'role': 'user', 'content': 'What is Python?'})
    response = ollama.chat(model='llama3.2', messages=messages)

    print(response['message']['content'])
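
The append-send-append pattern above can be wrapped in a small helper so the history is managed in one place. This is a sketch, not part of any SDK: the `Conversation` class and `echo_backend` function are hypothetical, and the backend is passed in as a plain function so the example runs without a model installed.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps the running message history and resends it on every turn."""

    def __init__(self, send: Callable[[list[Message]], str]):
        self.send = send  # function that takes the history and returns reply text
        self.messages: list[Message] = []

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = self.send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(messages: list[Message]) -> str:
    """Stand-in for a real model: reports how much history it received."""
    return f"(reply to message {len(messages)})"


chat = Conversation(echo_backend)
chat.ask("Hello!")
print(chat.ask("What is Python?"))
print([m["role"] for m in chat.messages])
```

With Ollama, the backend could be a function that calls ollama.chat(model='llama3.2', messages=msgs) and returns response['message']['content'], as in the example above.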

List Available Models

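    import ollama

    models = ollama.list()

    # Recent ollama-python releases expose the model name under 'model'
    # (older releases also offered 'name')
    for model in models['models']:
        print(f"{model['model']} - {model['size'] / 1e9:.2f} GB")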

JavaScript/Node.js SDKs

OpenAI JavaScript SDK

    import OpenAI from 'openai';

    const openai = new OpenAI({
      apiKey: process.env.OPENAI_API_KEY
    });

    async function main() {
      const completion = await openai.chat.completions.create({
        model: "gpt-4",
        messages: [
          { role: "user", content: "Explain async/await in JavaScript" }
        ]
      });

      console.log(completion.choices[0].message.content);
    }

    main();

Ollama JavaScript SDK

    import ollama from 'ollama';

    // Simple chat
    const response = await ollama.chat({
      model: 'llama3.2',
      messages: [
        { role: 'user', content: 'What is Node.js?' }
      ]
    });

    console.log(response.message.content);

    // Streaming
    const stream = await ollama.chat({
      model: 'llama3.2',
      messages: [{ role: 'user', content: 'Explain promises' }],
      stream: true
    });

    for await (const chunk of stream) {
      process.stdout.write(chunk.message.content);
    }

REST API vs SDK Comparison

Using Raw REST API (curl)

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "gpt-4",
        "messages": [{"role": "user", "content": "Hello"}]
      }'

Using Python SDK

    from openai import OpenAI

    client = OpenAI()

    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": "Hello"}]
    )

The SDK version is:

- Shorter and cleaner

- Easier to read and maintain

- Handles authentication automatically (OpenAI() reads the OPENAI_API_KEY environment variable by default)

- Provides better error messages

- Includes type hints and autocomplete

Key Concepts Summary

- 🤖 LLMs and Generative AI: AI models trained on text to understand and generate language. Parameters (3B, 7B, 70B) indicate model size and capability.

- ☁️ Cloud vs Local LLMs: Cloud: Best quality, no hardware, costs per use. Local: Private, free, requires powerful computer.

- 🔌 REST APIs: Web interface for sending requests and receiving responses via HTTP/HTTPS endpoints.

- 📦 SDKs: Language-specific libraries that simplify API usage with cleaner code and better error handling.

Resources

| Resource | Link |
|---|---|
| 📖 OpenAI API Documentation | platform.openai.com/docs |
| 🧬 Anthropic Claude API | docs.anthropic.com |
| 🦙 Ollama Documentation | github.com/ollama/ollama |
| 🐍 Ollama Python SDK | github.com/ollama/ollama-python |